Design Thinking for AI MVPs: 5 Stages to Product-Market Fit

MVP

Dec 16, 2025

Khyati Mehra

Building a product in a vacuum and hoping for market adoption is a direct route to failure, particularly given the capital-intensive nature of AI development. This is precisely where the design thinking process provides its strategic value. It's not a nebulous creative exercise but a battle-tested, systematic framework for identifying and solving high-value problems.

At its core, design thinking is a human-centered methodology for innovation. It grounds every strategic decision, from feature prioritization to market positioning, in a deep, analytical understanding of the end-user. For founders and investors, this process is a critical tool for de-risking a venture, ensuring capital is deployed against validated market needs, not just technological possibilities.

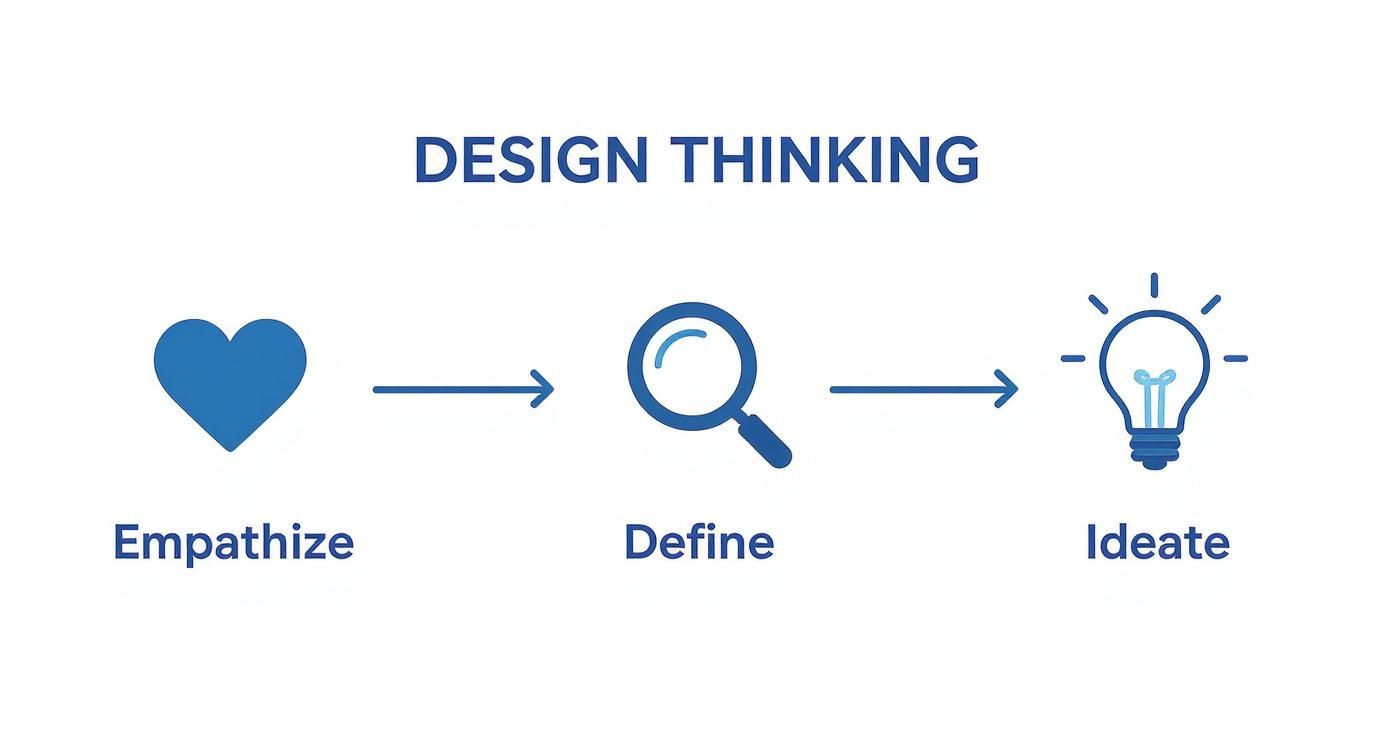

This iterative framework is structured around five distinct yet interconnected stages:

Empathize: Move beyond assumptions and immerse your team in the user’s operational reality to understand their pain points, objectives, and latent needs.

Define: Synthesize qualitative data from the empathy phase into a concise, actionable problem statement that will serve as the strategic focus for the entire project.

Ideate: Engage in divergent thinking to generate a broad spectrum of potential solutions, challenging conventional wisdom and exploring novel applications of technology.

Prototype: Translate abstract concepts into tangible, low-fidelity artifacts that allow for rapid, inexpensive testing of core assumptions.

Test: Validate prototypes with real users to gather empirical feedback, identify flaws in your logic, and generate insights for the next iteration.

An Iterative Loop, Not a Linear Path

Viewing these five stages as a simple checklist is a common but critical error. The strategic power of design thinking is rooted in its iterative, non-linear nature. It’s a continuous feedback loop.

Insights gained during the Test phase almost invariably compel a return to the Define or Ideate stages. This cycle of hypothesis, experiment, and data-driven refinement is what prevents the launch of a high-cost, low-impact product.

The objective is to fail early and inexpensively at the prototype stage, not late and catastrophically post-launch. In the high-stakes environment of AI MVP development, this iterative loop is your most significant competitive advantage.
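To make the loop concrete, here is a minimal Python sketch that models the five stages as a small state machine. The outcome labels (`problem_invalidated`, `solution_invalidated`, and so on) and the stage each one routes back to are illustrative assumptions, not part of any formal design thinking standard. The point is only that a Test result can send the team backward rather than forward.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    EMPATHIZE = "Empathize"
    DEFINE = "Define"
    IDEATE = "Ideate"
    PROTOTYPE = "Prototype"
    TEST = "Test"


# Default forward path through the first four stages.
FORWARD = {
    Stage.EMPATHIZE: Stage.DEFINE,
    Stage.DEFINE: Stage.IDEATE,
    Stage.IDEATE: Stage.PROTOTYPE,
    Stage.PROTOTYPE: Stage.TEST,
}

# Illustrative routing for Test outcomes (assumed labels, not a standard).
TEST_ROUTES = {
    "needs_more_research": Stage.EMPATHIZE,
    "problem_invalidated": Stage.DEFINE,
    "solution_invalidated": Stage.IDEATE,
    "usability_issue": Stage.PROTOTYPE,
}


def next_stage(current: Stage, test_outcome: Optional[str] = None) -> Optional[Stage]:
    """Return the stage to work on next, or None once the concept is validated."""
    if current is not Stage.TEST:
        return FORWARD[current]
    if test_outcome == "validated":
        return None  # ready to hand off to engineering
    return TEST_ROUTES[test_outcome]


def run_cycle(test_outcomes: list[str]) -> list[str]:
    """Walk the loop, consuming one outcome per Test visit; return the path taken."""
    outcomes = iter(test_outcomes)
    stage: Optional[Stage] = Stage.EMPATHIZE
    path = []
    while stage is not None:
        path.append(stage.value)
        outcome = next(outcomes) if stage is Stage.TEST else None
        stage = next_stage(stage, outcome)
    return path


print(run_cycle(["solution_invalidated", "validated"]))
# ['Empathize', 'Define', 'Ideate', 'Prototype', 'Test', 'Ideate', 'Prototype', 'Test']
```

Each visit to Test consumes one recorded outcome, so an invalidated solution costs one more Ideate, Prototype, Test pass rather than a post-launch rebuild.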

This is not just academic theory; it's a proven operational model. A study by Forrester found that design-led firms report 1.5x greater market share. Companies that rigorously apply these principles see significant acceleration in time-to-market and higher project success rates. The business case is further detailed by the Interaction Design Foundation.

For an AI startup seeking venture capital, demonstrating a disciplined, user-centric validation process is a powerful signal. It communicates to investors that you are mitigating risk and are rigorously focused on achieving product-market fit.

The Five Stages of Design Thinking: A Strategic Overview

| Stage | Core Objective | Key Deliverable | Strategic Value for AI MVPs |
|---|---|---|---|
| Empathize | Uncover latent user needs and contextual pain points through deep qualitative research. | User personas, journey maps, ethnographic field notes. | Identifies high-value problems that can be uniquely solved with AI. |
| Define | Synthesize research into a precise, actionable problem statement. | A "How Might We..." (HMW) question or a Point of View (POV) statement. | Aligns the entire team on a specific, validated target for development. |
| Ideate | Generate a wide array of potential solutions through structured brainstorming. | A prioritized feature backlog, solution storyboards, concept sketches. | Explores novel applications of AI beyond obvious, incremental improvements. |
| Prototype | Create low-fidelity, testable artifacts of core concepts. | Wireframes (Figma), interactive mockups (Framer), or functional no-code MVPs (Bubble). | Enables rapid, low-cost validation of both UI/UX and core AI logic. |
| Test | Gather empirical user feedback to validate or invalidate core assumptions. | Usability reports, validated learning summaries, iteration backlog. | Provides quantitative and qualitative data to guide the next development cycle. |

Each stage builds upon the last, creating a powerful feedback loop that systematically moves a concept closer to a product with demonstrable market demand.

A Deep Dive Into the Five Stages of Design Thinking

To transform the design thinking framework from a corporate buzzword into a high-impact tool for AI product development, one must execute each stage with analytical rigor. For founders and product leaders, this isn't about procedural compliance. It's a disciplined strategy to de-risk investment and validate market demand before committing significant engineering resources.

The process is not linear but cyclical. Learnings from later stages frequently necessitate a return to earlier ones, forcing a critical re-evaluation of core assumptions. This iterative feedback loop is the engine of genuine innovation.

This diagram illustrates the initial flow, beginning with human-centered research and progressing toward concrete solution ideation.

It underscores the journey from qualitative insight (Empathize) to problem clarification (Define) and, ultimately, solution brainstorming (Ideate). This groundwork is foundational for all subsequent development.

Stage 1: Empathize

The Empathize stage is the bedrock of the entire process, moving far beyond traditional market research that merely captures stated preferences. The objective is to understand what users actually do, think, and feel within their operational context. For an AI MVP, this is where you uncover the unspoken needs and workflow inefficiencies that a sophisticated algorithm can uniquely address.

This stage demands immersive, qualitative research methodologies. The goal is not statistical significance but deep contextual insight.

Ethnographic Field Studies: Observe users in their native environment. If building an AI tool for financial analysts, this means being physically or virtually present in their workspace, observing their workflows, and identifying the repetitive, low-value tasks they’ve normalized.

In-Depth Interviews: Conduct semi-structured conversations, not rigid Q&As. Employ techniques like the "Five Whys" to move beyond surface-level responses and uncover the core motivations and frustrations driving behavior.

Immersion and Contextual Inquiry: Directly use the tools and perform the processes your target users do. This first-hand experience builds genuine empathy and illuminates pain points users may be unable to articulate.

A common pitfall is confusing sympathy (feeling sorry for a user's problem) with empathy (understanding their worldview so deeply you can anticipate their needs). For an AI startup, this distinction is critical—it’s the difference between building a novel but irrelevant feature and a tool that becomes indispensable to their workflow.

Stage 2: Define

After accumulating a wealth of qualitative data in the Empathize stage, the Define stage focuses on synthesis—finding the signal within the noise. Here, you distill your observations into a precise, actionable problem statement. A poorly defined problem guarantees a misaligned solution, regardless of its technical elegance.

This is not merely about cataloging user complaints. It's about framing the core challenge from the user’s perspective. This statement becomes the strategic North Star for the project.

The goal is to formulate a problem statement specific enough to guide the team, yet broad enough to permit creative, non-obvious solutions.

Effective tools for this stage include:

Point of View (POV) Statements: This structured format clarifies the user, their need, and the underlying insight. It follows the template: [User] needs to [user's need] because [surprising insight]. For example: "A time-constrained marketing manager needs to automate social media performance reporting because they value strategic analysis but are consumed by manual data compilation."

"How Might We" (HMW) Questions: These transform POV statements into generative, open-ended questions that fuel ideation. The above example becomes: "How might we empower marketing managers to derive strategic insights from social media data without the burden of manual compilation?"

For any AI startup, this stage is a crucial checkpoint. Before a single model is trained, the entire team—from data scientists to investors—must be aligned on the exact problem being solved. A well-crafted HMW statement ensures this alignment and prevents costly scope creep.
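The POV template and its HMW reframing are structured enough to capture in a tiny data type, which can help keep problem statements consistent across a research repository. The following is a hypothetical helper (the `PointOfView` class and `draft_hmw` method are illustrative names, not an established tool). It fills the template with the marketing-manager example from the Define stage and produces a mechanical first-draft HMW question.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PointOfView:
    """POV template: [User] needs to [user's need] because [surprising insight]."""

    user: str     # who, e.g. "a time-constrained marketing manager"
    need: str     # a verb phrase, e.g. "automate social media performance reporting"
    insight: str  # the non-obvious "why" uncovered during Empathize

    def statement(self) -> str:
        sentence = f"{self.user} needs to {self.need} because {self.insight}."
        return sentence[0].upper() + sentence[1:]

    def draft_hmw(self) -> str:
        # Only a mechanical first draft: the team should then reframe it so it
        # targets the underlying pain rather than one specific solution.
        return f"How might we help {self.user} {self.need}?"


pov = PointOfView(
    user="a time-constrained marketing manager",
    need="automate social media performance reporting",
    insight="they value strategic analysis but are consumed by manual data compilation",
)
print(pov.statement())
print(pov.draft_hmw())
```

The draft HMW still names the need quite literally. The stronger question in the Define stage, about deriving strategic insight without the burden of manual compilation, comes from the insight field plus human judgment, which is exactly the step no template can automate.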

Stage 3: Ideate

With a clearly defined problem, the Ideate stage begins. This is where your team generates a high volume of potential solutions through divergent thinking. The initial focus is on quantity over quality, as the most innovative breakthroughs often emerge from the periphery of conventional thinking.

Effective ideation is not an unstructured free-for-all; it relies on structured techniques to push beyond ingrained assumptions.

SCAMPER Method: A systematic checklist for innovating on existing ideas: Substitute, Combine, Adapt, Modify, Put to another use, Eliminate, Reverse.

Worst Possible Idea: This counterintuitive technique reduces performance anxiety. By intentionally brainstorming terrible solutions, teams break down creative barriers. Often, inverting a "bad" idea reveals the kernel of a brilliant one.

Analogous Brainstorming: Analyze how different industries solve structurally similar problems. How does Amazon's logistics network optimize delivery routes? How do AAA games onboard new players? These analogies can spark truly novel concepts for your AI product.

For teams building AI prototypes for VCs, this stage connects a deep user problem to a compelling technical solution. The output is a portfolio of ideas that can then be filtered through the desirability, feasibility, and viability lenses to determine which concepts merit prototyping.
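One way to run that filter is a lightweight scoring pass after an ideation workshop. The following sketch assumes each idea has been scored 1 to 5 on each lens; the `Idea` and `shortlist` names, the threshold of 3, the ranking rule (weakest lens first, then total), and the example ideas are all hypothetical. Gating on the weakest lens reflects the principle that a concept must hold up on all three dimensions, so high desirability cannot compensate for an infeasible build.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    desirability: int  # 1-5: evidence that users want it (from Empathize/Test)
    feasibility: int   # 1-5: can we build it with the data and models available?
    viability: int     # 1-5: can it support a sustainable business?

    def weakest_lens(self) -> int:
        return min(self.desirability, self.feasibility, self.viability)

    def total(self) -> int:
        return self.desirability + self.feasibility + self.viability


def shortlist(ideas: list[Idea], min_each: int = 3, top_n: int = 3) -> list[str]:
    """Keep ideas that clear the bar on all three lenses, then rank them by their
    weakest lens, breaking ties by total score."""
    passing = [idea for idea in ideas if idea.weakest_lens() >= min_each]
    passing.sort(key=lambda idea: (idea.weakest_lens(), idea.total()), reverse=True)
    return [idea.name for idea in passing[:top_n]]


ideas = [
    Idea("Auto-generated weekly performance report", 5, 4, 4),
    Idea("Fully autonomous campaign manager", 5, 1, 3),
    Idea("Alerts on sudden engagement drops", 4, 4, 3),
    Idea("Natural-language questions over metrics", 4, 3, 3),
]
print(shortlist(ideas, top_n=2))
# ['Auto-generated weekly performance report', 'Alerts on sudden engagement drops']
```

The "fully autonomous campaign manager" is dropped despite its top desirability score because its feasibility score fails the bar, which is the kind of trade-off the three lenses are meant to surface.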

Stage 4: Prototype

The Prototype stage transforms abstract ideas into tangible artifacts. The objective is not to build a production-ready product but to create low-cost, low-fidelity versions of a concept to test assumptions and gather feedback. For AI MVPs, prototyping is the most effective mechanism for risk reduction.

The Stanford d.school’s influential methodology is built around this iterative cycle. Because prototypes are quick and inexpensive to build, over 70% of tested concepts are reportedly modified or discarded early, before significant time and money have been committed. It's no surprise that organizations that execute this well are reportedly 1.5 times more likely to meet customer needs. You can learn more about the roots of this approach by reading about the evolution of design thinking methodologies.

Prototype fidelity should increase as confidence in the core concept grows:

Low-Fidelity Prototypes: These are rapid and inexpensive artifacts like paper sketches, storyboards, or basic clickable wireframes created in tools like Figma. Their purpose is to test the core value proposition and user flow. Is the concept understandable? Is the workflow intuitive?

High-Fidelity Prototypes: Once the core concept is validated, fidelity increases. These are interactive mockups that closely resemble the final product's look and feel, often built with tools like Framer. For AI products, a "Wizard of Oz" prototype—where a human manually simulates the AI’s output—can be used to test user interaction and value before a single algorithm is developed.
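A Wizard of Oz prototype can be wired up so that the rest of the prototype never knows a human is involved. The following sketch shows one hypothetical arrangement: the UI talks to a `Recommender` interface, and the prototype implementation forwards each query to a human operator and logs the exchange for the debrief. All names here are illustrative. The design choice worth copying is the shared interface, which lets a trained model replace the wizard later without touching the UI code.

```python
from typing import Callable, Protocol


class Recommender(Protocol):
    """The interface the prototype UI talks to; a real model can implement it later."""

    def recommend(self, query: str) -> str: ...


class WizardOfOzRecommender:
    """Presents as the AI feature, but a human operator (the 'wizard') writes every answer."""

    def __init__(self, wizard: Callable[[str], str]) -> None:
        self._wizard = wizard
        self.log: list[tuple[str, str]] = []  # (query, response) pairs for the debrief

    def recommend(self, query: str) -> str:
        response = self._wizard(query)
        self.log.append((query, response))
        return response


def render_suggestion(engine: Recommender, query: str) -> str:
    # The UI depends only on the Recommender interface, so swapping the wizard
    # for a trained model later does not change this code.
    return f"Suggested next step: {engine.recommend(query)}"


# In a live session the wizard could be the built-in input(), which shows the
# participant's query to the operator in a terminal and returns what they type.
# Here a scripted stand-in keeps the example non-interactive.
scripted = WizardOfOzRecommender(lambda q: "Merge the duplicate Acme accounts first")
print(render_suggestion(scripted, "Clean up my CRM before the forecast"))
# Suggested next step: Merge the duplicate Acme accounts first
```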

This stage forces a confrontation with implementation details. It's one thing to conceptualize an "AI-powered dashboard," but designing the specific inputs, outputs, and interactions that deliver tangible value is another challenge entirely.

Stage 5: Test

The final stage, Test, is where hypotheses meet reality. You put your prototypes in front of real users. This is not a sales demonstration; it's a scientific experiment designed to validate or invalidate key assumptions. Your role is to observe, listen, and learn with an open mind, actively seeking the critical feedback that exposes your blind spots.

To elicit candid, actionable insights, tests must be well-structured.

Define Clear Learning Objectives: What specific hypothesis are you testing? For example: "We believe users will trust AI-generated recommendations if we provide transparent access to the underlying data sources."

Observe Behavior, Not Opinions: Avoid leading questions like, "Do you find this easy to use?" Instead, assign a specific task and observe their behavior. Pay close attention to points of hesitation, confusion, or delight.

Encourage Radical Candor: Create a safe environment for critical feedback. Phrases like, "You cannot break this, and you won't hurt our feelings—we need your honest critique," can elicit more genuine responses.
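These principles become operational when the hypothesis and its pass bar are written down before the first session and the team records behavior rather than opinions. The following sketch assumes the trust hypothesis above is measured by whether participants act on the AI recommendation unaided; that operationalization, the 0.75 threshold, the `Session`/`Hypothesis`/`evaluate` names, and the session data are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Session:
    participant: str
    completed_task: bool    # did they finish the assigned task unaided?
    seconds_on_task: float
    hesitations: int        # pauses or confusion points noted by the observer


@dataclass(frozen=True)
class Hypothesis:
    statement: str
    min_success_rate: float  # fixed BEFORE testing so the bar cannot move afterwards


def evaluate(hypothesis: Hypothesis, sessions: list[Session]) -> dict:
    """Summarize observed behavior and check it against the pre-set bar."""
    if not sessions:
        raise ValueError("no sessions to evaluate")
    rate = sum(s.completed_task for s in sessions) / len(sessions)
    return {
        "hypothesis": hypothesis.statement,
        "success_rate": rate,
        "median_seconds_on_task": median(s.seconds_on_task for s in sessions),
        "total_hesitations": sum(s.hesitations for s in sessions),
        "supported": rate >= hypothesis.min_success_rate,
    }


h = Hypothesis(
    "Users act on AI recommendations when the underlying data sources are visible",
    min_success_rate=0.75,
)
sessions = [
    Session("P1", True, 95, 0),
    Session("P2", True, 140, 1),
    Session("P3", False, 300, 4),
    Session("P4", True, 120, 0),
    Session("P5", True, 210, 2),
]
print(evaluate(h, sessions))
```

With five participants this is a qualitative signal, not a statistically significant result. Its value is that the bar was set in advance, so a disappointing outcome cannot be quietly reinterpreted as a success.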

The output of the Test stage is not a simple pass/fail grade. It's a rich dataset of validated learnings that directly informs the next iteration of the design loop. Receiving negative feedback at this stage is a significant win—it represents a low-cost lesson that prevents a high-cost market failure. This cycle of building, testing, and learning is the engine of product innovation.

When Should You Actually Use Design Thinking?

Understanding the what of design thinking is necessary but insufficient. Knowing when to deploy it is what distinguishes innovative teams from those merely executing tasks.

This framework is not a panacea for every business challenge. It delivers maximum value when navigating high levels of ambiguity and developing solutions rooted in complex human needs. For founders and product leaders, deploying it is a strategic decision—best suited for exploring uncharted territory, not optimizing well-understood processes.

Finding the Right Moments for a Design Thinking Cycle

Certain business challenges are ideally suited for this human-centered approach. Applying it in these high-impact scenarios provides a significant competitive advantage and de-risks ventures before substantial capital is committed to building an AI MVP.

Initiate a design thinking cycle when you are:

Entering a New Market: When targeting a new industry or customer segment, your internal assumptions are almost certainly flawed. Design thinking compels you to build strategy from the ground up, based on direct user evidence, not aggregated market reports.

Developing a Novel AI Application: Instead of starting with, "What is technically possible with our LLM?" design thinking forces the question, "What critical user problem can we solve in a fundamentally new way with AI?" This prevents the creation of technologically impressive but commercially non-viable products.

Struggling to Achieve Product-Market Fit: If user engagement is stagnant or churn is high, it signals a disconnect between your product and user needs. The framework provides a structured methodology to diagnose the root cause of this misalignment by returning to the user's core pains and objectives.

How It Plays with Lean and Agile

A common misconception is that design thinking conflicts with frameworks like Lean Startup or Agile. In reality, they form a powerful, complementary triad for modern product development, each addressing a critical strategic question.

Design Thinking asks: Are we solving the right problem? (Desirability)

Lean Startup asks: Should we even build this business? (Viability)

Agile asks: How can we build this thing efficiently? (Feasibility)

Consider it a strategic relay race. Design thinking runs the first leg, exploring the ambiguous problem space and handing off a validated concept—a "what" supported by deep user insight. That validated prototype, along with its user stories, is then passed to an Agile team for efficient, iterative execution.

The Lean Startup's "build-measure-learn" loop acts as the overarching strategic framework, ensuring the entire endeavor is directed toward building a sustainable, scalable business.

A clear product vision, shaped by this integrated approach, is non-negotiable for a successful launch. When you map out a comprehensive AI strategy, you’re weaving user needs together with technical execution and business goals. It's how you ensure you don't just build the product right, but that you’re building the right product from day one.

A Prototyping Tool Selection Framework

The choice of tool should be driven by the learning objective. A clickable mockup is sufficient for testing a user flow, but validating the performance of an AI model requires a more robust, functional prototype. Use this framework to guide your decision.

| Tool | Fidelity Level | Primary Use Case (UI/UX vs. Functional Logic) | Technical Skill Required |
|---|---|---|---|
| Figma | Low to High (Visual) | UI/UX Design & Clickable Prototypes | Low |
| Framer | High (Interactive) | UI/UX with Realistic Animations & Transitions | Low to Medium |
| Bubble | High (Functional) | Functional Logic & Database Interactions | Medium |

An optimal workflow combines these tools flexibly. Begin with Figma to validate the user experience, then transition to a platform like Bubble to build a functional prototype that proves your AI concept delivers tangible value. This layered approach is often the fastest path to market validation.

Common Pitfalls and How to Avoid Them

Even the most robust frameworks are only as effective as the teams implementing them. For founders and VCs focused on AI product acceleration, understanding where the design thinking process can fail is as crucial as knowing the steps themselves. Execution risk is significant, but these common traps are avoidable with discipline.

The process has been refined for decades, with early ideas from thinkers like Herbert Simon in 1969 evolving into the human-centered methodology championed by IDEO and Stanford’s d.school. Today, it’s a disciplined way to make sure what you’re building actually connects with real user needs. You can learn more about the history of this influential methodology.

Successfully navigating the design thinking process means being honest enough to avoid these critical mistakes.

Mistaking Assumptions for Empathy

This is the most frequent and fatal error, occurring at the outset. Teams conduct a few surveys, interview a handful of friendly customers, and mistake this superficial data gathering for genuine empathy. They proceed to build a product based on their own assumptions, not validated user needs.

Execution Pitfall: Relying on what users say they want instead of observing what they actually do. This is the fastest path to building a feature no one uses.

How to Avoid It:

Employ Ethnographic Methods: Don't just interview users; observe them in their native workflow. For AI MVP development, this means watching them perform manual tasks to identify the latent pain points your algorithm can solve. An hour of observation often yields more insight than a week of surveys.

Engage with Extreme Users: Interview your most sophisticated power users and your most frustrated novice users. Their perspectives are outliers that reveal critical flaws and opportunities that the "average" user will not.

Defining the Problem Too Broadly or Too Narrowly

An improperly framed problem statement acts like a faulty GPS, sending the entire project in the wrong direction.

If the statement is too broad ("How might we improve logistics?"), the team lacks focus and direction. If it's too narrow ("How might we add a shipment tracking button?"), you have prematurely jumped to a solution, foreclosing other, potentially more innovative possibilities.

How to Avoid It:

Anchor in the User's Point of View: A strong problem statement is always centered on the user. It synthesizes their role, their core need, and the insight you've uncovered. For example: "A junior financial analyst needs to automate quarterly report generation because manual data aggregation is preventing them from focusing on high-value strategic analysis."

Frame as a "How Might We" Question: This simple linguistic shift transforms a problem into an optimistic, open-ended challenge. It invites creative ideation rather than incremental fixes.

Falling in Love with the First Prototype

This is a classic cognitive bias. After investing effort to create a tangible artifact, founders become emotionally attached. This "sunk cost" fallacy makes it difficult to objectively process negative feedback during testing. The team begins defending the prototype instead of learning from it.

For teams building AI prototypes for VCs, this inability to pivot based on market feedback is a major red flag.

How to Avoid It:

Prototype to Learn, Not to Validate: Reframe the objective. The purpose of an early prototype is to discover its flaws, not to confirm your initial brilliance. Treat user tests as experiments designed to falsify your hypotheses.

Maintain Low Fidelity: Build the cheapest, fastest possible version of the idea. Use paper, simple wireframing tools, or other rapid methods. The less you invest in time, cost, and ego, the easier it will be to discard a flawed concept and iterate.

Expert AI startup services can provide a disciplined, objective third-party perspective. A skilled partner ensures the process yields market-validated insights, not just a confirmation of pre-existing biases.

How We Apply Design Thinking to AI MVP Development

Theory is irrelevant without execution that drives ROI. At our studio, the five stages of design thinking are not an academic exercise but a disciplined, repeatable system for de-risking AI MVP development. We leverage this framework to ensure the products we build are not only technologically advanced but solve a validated, high-value market need.

Consider a recent engagement with a startup aiming to build an AI-powered sales forecasting tool. The founding team was convinced the market needed more complex, sophisticated predictive algorithms.

From Flawed Assumptions to Validated Solutions

Instead of immediately architecting a solution, we began with deep empathy interviews and contextual inquiry. We didn't ask what features they wanted; we observed their teams' end-of-quarter workflows. The critical insight emerged quickly: the primary bottleneck was not a lack of predictive accuracy but the laborious, manual effort required to clean and consolidate data from disparate sources before any analysis could begin.

This insight completely reframed the problem. The "How Might We" question shifted from "How might we predict sales more accurately?" to "How might we eliminate the manual drudgery of sales data preparation?" This pivot, derived directly from the Empathize and Define stages, prevented months of misdirected engineering effort.

We then moved to rapid ideation and prototyping, quickly building a functional no-code prototype that automated data ingestion and cleaning from key CRM and ERP systems. This was not a visual mockup; it was a working tool that demonstrated immediate, quantifiable value.

When we tested this prototype with sales operations teams, the feedback was unequivocal. The tool addressed a far more urgent and costly pain point than predictive analytics. A solution that could save each team member 10+ hours per week was a clear must-have. This validation provided their investor pitch with undeniable evidence of product-market fit.

This entire cycle—from initial discovery to a validated, functional prototype—was completed in weeks, not months. You can explore this lean methodology further in our analytical guide to building an MVP for AI startups.

This is the tangible power of a rigorously applied design thinking process. It focuses capital and resources on solving the right problems, leading directly to a market-ready product that users adopt and investors back. If you need to translate your AI vision into a validated MVP with speed and precision, let’s discuss how our AI startup services can help.

Got Questions About Design Thinking? We've Got Answers.

We understand. As a leader focused on building a scalable venture, you need to know how theory translates into execution and market impact. Here are answers to the most common questions we receive from founders and product executives about implementing design thinking.

How Does This Fit in with Our Agile Sprints?

Design thinking and Agile are not conflicting methodologies; they are complementary parts of a modern product development lifecycle. They don't compete; they complete each other.

Design thinking addresses the "why" and the "what." It is the strategic, upfront discovery work to ensure you are solving a real problem for a real user. It de-risks the product concept itself, ensuring you are building the right thing.

Agile addresses the "how." It takes the validated concepts and user stories generated during design thinking and translates them into shippable code in an efficient, iterative manner. It is a framework for building the thing right. The output of the former becomes the input for the latter, creating a seamless handoff from strategy to execution.

Isn't This Just for Consumer Apps?

Absolutely not. While design thinking gained prominence with consumer-facing products, its application is arguably more critical in complex B2B and enterprise software environments.

The "user" in a B2B context is rarely a single individual. It's an ecosystem of stakeholders: the economic buyer, the end-user, the IT administrator, the compliance officer—all with distinct and often conflicting needs. The Empathize stage is a powerful tool for mapping this stakeholder ecosystem, uncovering the intricate workflow pains and organizational challenges that, when solved, create immense and defensible enterprise value.

How Do We Actually Measure Success Here?

Measuring the ROI of design thinking is not about a single KPI but a set of evolving metrics that align with each stage of the process.

Early Stages (Empathize, Define): Success is measured qualitatively. Is the clarity of your user insights increasing? Is your problem statement becoming more precise and actionable? This is your leading indicator of progress.

Later Stages (Prototype, Test): Success becomes quantifiable. You can measure task success rates, time-on-task, user satisfaction scores such as the System Usability Scale (SUS), or the number of validated learnings per iteration.
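The System Usability Scale (SUS) is one of the few metrics here with a fixed, published scoring rule: ten statements answered on a 1-to-5 agreement scale, where odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the 0-40 sum is multiplied by 2.5 to give a 0-100 score. A minimal Python sketch of that rule follows; the `sus_score` name and the participant responses are made up for illustration.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Score one participant's System Usability Scale (SUS) questionnaire.

    `responses` holds the ten answers in questionnaire order, each on a 1-5
    scale (1 = strongly disagree, 5 = strongly agree).
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs exactly ten responses, each from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded, even-numbered negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5  # rescale the 0-40 raw sum to 0-100


participants = [
    [4, 2, 5, 1, 4, 2, 5, 2, 4, 1],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
print([sus_score(p) for p in participants])  # [85.0, 50.0]
print(mean(sus_score(p) for p in participants))  # 67.5
```

Tracking the mean score across iterations of the same prototype gives a simple, comparable trend line for the Test stage.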

Ultimately, the true business impact is reflected in lagging indicators: increased user adoption and retention, reduced customer acquisition cost (CAC) through better product-market fit, and a faster, more capital-efficient path to revenue.

Ready to move from assumption-based development to building a product with validated market demand? The team at Magic specializes in this process, helping founders accelerate their AI MVP development by building on a foundation of deep customer insight. Let's build your AI-powered MVP.

Ready to dive deeper? Download our exclusive AI MVP Development Canvas—a step-by-step guide to applying these principles to your own venture.